I have always been a fan of the oracle SQL trace facility. It’s a very powerful method to analyze performance problems, especially if you don’t know the application very well. If you don’t know oracle SQL tracing yet, you will find some info at orafaq and some more details at orable-base. Obviously, Oracle has also some documenation about it.

In short, the Oracle SQL tracing facility causes the database server to write all executed SQL statements to a file for later analysis. One of the main issues with SQL tracing is the huge size of the trace files; every statement execution is logged, potentially with additional data about the execution plan, wait events, and bind values.

This article introduces the new possibilities of Oracle 10g to enable tracing on a very fine level. With a properly instrumented application, tracing can be enabled for specific users or program modules only. With such a fine control over the tracing, the generated data volume can be kept small and SQL tracing becomes a much more useful tool.

The first part of the article explains how a Java application can be instrumented to allow fine-grained tracing while the second part shows the administrative interface to enable tracing for specific modules.

Instrumenting the Application

First things first. Before the database can selectively trace for specific users or modules, the application must tell the database to which user or module an actual SQL statement belongs.

Luckily we don’t have to explicitly specify this for each individual statement. It’s more like a session property that can be set at any time. Once set, it affects all SQLs until a different value is set.

The three values that affect tracing are CLIENT_IDENTIFIER, MODULE and ACTION. Those must be properly set in order to allow selective tracing. With properly I mean two aspects:

-

Allow meaningful filtering

CLIENT_IDENTIFIERcan be used freely.MODULEandACTIONdepend on each other; it’s not possible to trace a specific action in every module. In other words, aMODULEis a container forACTIONS. -

Don’t introduce a performance impact

The Oracle database provides a way to set the

CLIENT_IDENTIFIER,MODULEandACTIONwithout doing an additional server round-trip. The information is sent piggyback with the next round-trip. You must take care to use the proper implementation to not introduce an additional server round-trip that would introduce a major performance impact.UPDATE: The issue about server round tips is also the topic of later article: Latency: Security vs. Performance.

The Required JDBC API

Since all of the described functionality is an oracle specific extension, the JDBC standard doesn’t provide an API to set the required attributes. To access that functionality you must cast the standard JDBC Connection to an OracleConnection object first. That’s all, the OracleConnection class provides the two methods we need:

The naming might confuse in the first place, but when you consider that the actual identifier for a real human being is intended to be put into the CLIENT_IDENTIFER field, it might become clear that this is really for end-to-end measurements.

The actual data is passed as an String array that contains an entry for the properties we are interested in —along with others. You can get all of them and provide new ones. Remember that neither getEndToEndMetrics() nor setEndToEndMetrics() causes a server round trip. In case you change any property, the change is sent piggyback with the next server round-trip. I have used the following class for my tests:

class OracleEndToEnd {

String oldData[];

OracleConnection ocon;

public

OracleEndToEnd(Connection con, String module,

String client , String action)

throws SQLException

{

if (con instanceof OracleConnection) {

ocon = (OracleConnection)con;

oldData = ocon.getEndToEndMetrics();

if (oldData == null) {

oldData =

new String[OracleConnection.END_TO_END_STATE_INDEX_MAX];

}

String[] metrics =

new String[OracleConnection.END_TO_END_STATE_INDEX_MAX];

int minidx = (metrics.length < oldData.length)

?

metrics.length : oldData.length;

System.arraycopy(oldData, 0, metrics, 0, minidx);

if (action != null) {

metrics[OracleConnection.END_TO_END_ACTION_INDEX] = action;

}

if (client != null) {

metrics[OracleConnection.END_TO_END_CLIENTID_INDEX] = client;

}

if (module != null) {

metrics[OracleConnection.END_TO_END_MODULE_INDEX] = module;

}

ocon.setEndToEndMetrics(metrics, (short) 0);

}

}

public void

endSection()

throws SQLException

{

if (ocon != null) {

ocon.setEndToEndMetrics(oldData, (short) 0);

}

}

}

This class is intended to be used as wrapper around a specific section of your code (not to say module or action) like this:

OracleEndToEnd oee = new OracleEndToEnd(con, "MOD1", null, "ACT1");

try {

selectIt(con);

} finally {

oee.endSection();

}

When done carefully, a hierarchical structure of MODULES and ACTIONS can be build. This code was of course only my proof-of-concept code.

To instrument your existing application, you should look out for central places where you can add the code as shown above. In example, many applications use a database connection pool explicitly. By instrumenting the pool and passing additional parameters—like CLIENT_IDENTIFER and MODULE—to the get()/borrow()/whatever() method, much of the overhead can be centralized.

The best way to instrument your application depends to the frameworks in use. That’s the time to mention that I am consultant, let me know if I can help you ;)

Performance Comparison

I have written an example Java implementation—on basis of the class presented above—to check the performance impact of a properly instrumented application. To make the long story short: in a networked environment there isn’t any considerable impact when properly instrumenting a Java application. In case the application and the database are hosted on the same node, if no network involved, the impact might become noticeable (8% in my experiment), but this was still an extreme case. Generally I believe that a proper instrumentation does not impact performance in a noticeable way.

For my performance experiment, I used a program that issued the same SQL statement many thousand times. To keep the execution time of the statement low, I have used a very trivial statement:

select 1 from dual

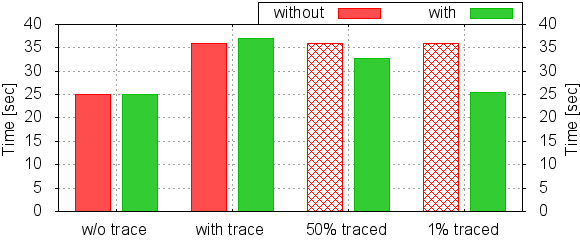

I have executed the program with and without instrumentation for the following scenarios:

- Without any tracing enabled

- With full tracing enabled (trace everything)

- Trace only every second SQL execution (only with the instrumented code)

- Trace only 1% of the executed statements (only with the instrumented code).

The results are in the chart in a networked environment—application and database hosted on different nodes:

There is NO measurable impact when tracing is disabled. This is the most important aspect. You can just add the needed CLIENT_IDENTIFIER, MODULE and ACTION and it will not harm performance—when done properly. When tracing is enabled, there actually IS a performance drawback. I am not sure where this comes from; I could imagine that the additional data written into the trace file—module name and so on—cause the regression . However, that impact is still minor, 5% in my extreme test scenario. For test case 3 and 4 there is no direct comparison because partial tracing requires the instrumented code. The checked bars are the results of the code without instrumentation but full tracing enabled—the only way to gather similar SQL trace files. You see that the performance impact relates to the traced volume. When tracing only a very small fraction of the overall code the impact is hardly measurable.

All of this requires a proper instrumentation of the application not to cause additional round trips.

The Administrative Interface

With Oracle 10g, yet another way to enable tracing is provided in form of the DBMS_MONITOR package. This package adds some great tools to perform SQL Tracing in a real world scenario.

Instead of

ALTER SESSION SET SQL_TRACE=TRUE;

or

ALTER SESSION SET EVENT='10046 trace name context forever, level 4'

we can now use

exec DBMS_MONITOR.SESSION_TRACE_ENABLE(binds=>true);

Which is actually the same in pink—except the less cryptic syntax—no benefit. Very often we want to enable tracing from outside the current session, which is also possible with the same procedure:

exec DBMS_MONITOR.SESSION_TRACE_ENABLE(sid, serial#, binds=>true);

Again, a nicer way to do what was possible before; with DBMS_SYSTEM.SET_EV. But now, you have only one package to remember: DMBS_MONITOR.

Sometimes we want to trace as session that will be opened somewhat later. For example, a batch job that runs at night. For this case the traditional solution was to create a SYSTEM LOGON trigger. The body of the trigger typically checks some conditions to decide to trace or not to trace. Very often, the decision is based on the user-name that is fetched via SYS_CONTEXT/USERENV or information from v$session. Many times, applied guesswork is added to the trigger. As a last resort, if it’s not even possible to trace all session that are opened during a specific time frame, it’s also possible to trace much more than needed and filter afterwards.

In a real world scenario, tracing everything is often not feasible because of the huge data volume created. Many people also argue that there is a considerable performance hit. Although I admit, and demonstrate below, that there IS a performance hit, it is usually negligible if neither waits nor binds are traced. The reason that tracing can’t be enabled globally on a production system, is the disk space requirement.

The DBMS_MONITOR Killer Features

The real power of DBMS_MONTOR isn’t that it provides a more convenient interface to enable tracing. The real power comes from

- the ability to persistently configure tracing

- the ability to perform fine grained, selective tracing

With DBMS_MONITOR, the need for LOGON triggers is gone. E.g., to enable tracing for all active and all future session for a specific service—like SERVICE_NAME in the connect descriptor—use the following:

exec DBMS_MONITOR.SERV_MOD_ACT_TRACE_ENABLE('SERVICE_NAME');

This will enable tracing for all current and future sessions with the specified SERVICE_NAME.

The second killer feature is the ability to perform selective tracing based on

- client identifier

- module

- action

These are session properties can be set the the application. When done properly, the application can change those properties at a very high frequency—for every statement—without introducing a considerable performance hit.

For example, if the application sets the CLIENT_IDENTIFIER to reflect the user ID of a websites user, tracing can be enabled for that particular user only. Similarly selective tracing on MODULE and ACTION level is possible. Consider the following activities happening in a session:

| Module | Action | statement |

|---|---|---|

| MOD1 | ACT1 | select * from … |

| MOD1 | ACT2 | insert into … |

| MOD2 | ACT1 | update… |

| MOD2 | ACT3 | delete … |

With DBMS_MONITOR.SERV_MOD_ACT_TRACE_ENABLE we can enable tracing for MOD1 only if you like:

exec DBMS_MONITOR.SERV_MOD_ACT_TRACE_ENABLE('SERVICE_NAME', 'MOD1');

The effect is as expected: only the statements attributed to MOD1 will be traced. Consequently you can drill down to trace only ACT1 within MOD1:

exec DBMS_MONITOR.SERV_MOD_ACT_TRACE_ENABLE('SERVICE_NAME', 'MOD1',

'ACT1', waits=>true, binds=>true);

This will trace only the insert statement, with wait and bind data. Similar possibilities exist for the CLIENT_IDENTIFIER:

exec DBMS_MONITOR.CLIENT_ID_TRACE_ENABLE('CLIENT_IDENTIFIER',

waits=>false, binds=>true);

Remember that all of this is persistent, even across database restarts. So we need to disable it again. Of course we will forget what was enabled, so there is a table to query for that:

SQL> SELECT trace_type, primary_id, qualifier_id1 ID1, qualifier_id2 ID2, waits, binds FROM dba_enabled_traces;TRACE_TYPE PRIMARY_ID ID1 ID2 WAITS BINDS ---------------------- ----------------- ------ ----- ----- ----- SERVICE_MODULE_ACTION SERVICE_NAME MOD1 ACT1 TRUE TRUE SERVICE SERVICE_NAME TRUE FALSE SERVICE_MODULE SERVICE_NAME MOD1 TRUE FALSE CLIENT_ID CLIENT_IDENTIFIER FALSE TRUE

With these methods we can enable tracing on a very fine granularity in a very convenient way. Since the tracing can be focused, space and performance issues are less problematic.

Résumé

Once an application is instrumented, fine-grained tracing can be applied very straight. Because of the reduced overhead and disk space requirements, SQL Tracing might become a more frequently used tool to troubleshoot poor performance.

You can choose the effort you put into instrumentation by yourself. For example, you might choose to set the MODULE only for one particular module that you need to trace right now, and don’t adopt other modules. In a step-by-step manner you can extend the instrumentation as needed.

During my experiments for this article I have found some traps you should be aware of:

-

The

ACTIONseems to consider only 8 characters

During my tests I noticed that the significant length of theACTIONseems to be only 8 characters (although documented as 32 bytes). However, any attempt to enable tracing for anACTIONlonger than 8 bytes failed on my 10g test machine. -

A wrong implementation might introduce a major performance penalty

Double check your implementation for that. -

SERVICE_NAMEmight be set to an unexpected value

Depending on the way the application connects,SERVICE_NAMEmight not be present so that it defaults toSYS$USER. If it doesn’t work on the first try, verify that the sessions you want to trace has the expectedSERVICE_NAME(select serivce_name from v$session....). Use the correct connect URL with the thin driver. - When using

DBMS_MONITOR, wait event tracing is TRUE per default

It’s not fatal to open your mind to new approaches.