I have witnessed a very short talk between a network engineer and a top-level manager at a client’s Christmas party. The network guy explained that the firewall adds about 0.2 milliseconds latency to each round trip between the application server and the database, which adds up to some hours in one particular case. So the manager asked what could be done and the network guy provided two solutions:

- Change the application to make less round trips

- Accept the security risk and don’t put a firewall in between those two tiers

Funny enough the network guy explained that the second option needs the managers signature because it bypasses the corporate security guidelines and somebody must take the responsibility for that.

Consider you are the manager, would you sign that a paper?

I guess I would not, and I also guess my client didn’t—otherwise it was against my advice.

I will explain the term latency in this article, provide some insight why it is so easy to build latency bandit applications, and show some ways to reduce latencies.

Weekend Shopping

The best explanation for latency I ever came across is from the guys over at RoughSea. There is a video that describes shopping like that (simplified):

- Drive to the super market

- Locate what’s needed (e.g., milk)

- Pay

- Store the item in the car

- Drive back home

- Store the item (e.g., fridge)

- Then start again for the next item on the shopping list (e.g., corn flakes).

The whole 20 minutes video is a great watch and covers many other frequent issues as well. If you just want to watch that particular scene, here is a direct youtube link.

It’s hard not to D’oh when watching this video. It makes it very obvious that most of the time is spent on the road. The shopping itself is only good for a rather small fraction of the overall process.

The network between an application and a database is very similar to the road in that example. Doing too much ping-pong between those two tiers will cause performance problems—no matter how fast the network is.

How Can It Happen?

There are two questions to consider:

- How can somebody implement software that way?

- How can a latency of 0.2 milliseconds make any difference?

First of all let’s look at the 0.2 milliseconds question. This value was provided by the network engineer as additional latency because of the new firewall. That’s on top of the latency the network had before, without the firewall. A typical latency for a switched LAN without firewall is about 0.1 – 0.2 milliseconds. If a firewall adds 0.2ms to that, the overall latency raises to about 0.4ms per round trip. In other words, doing 2500 round trips takes a second. That sounds a lot, but it isn’t. The problem is that there is hardly any reasonable task that can be accomplished with a single round trip.

A real world application needs to execute many different SQLs to complete a task. Even worse, a single SQL statement might need many round trips. E.g., a SELECT statement against an Oracle database needs two round trips at least. All of that sums up; an operation that executes 10 SELECTs will accumulate about 8ms latency. More complex applications might execute hundreds SQLs—that means 80 milliseconds of latencies. I have seen batch jobs that issue 50 million SQLs so that the round trip latency sums up to 10 hours!

So, why! Why is it done that way?

Because it is easier to implement. Therefore faster to implement and less error prone. Consider the shopping example again. The algorithm is very simple; just repeat all steps until the shopping list is done. This works—no matter how long the shopping list gets.

An improved variant of the algorithm—that is latency aware—might look like this:

- Drive to the super market

- FOR EACH ITEM: Locate item (milk, corn flakes)

- Pay

- FOR EACH ITEM: Store into the car

- Drive back home

- FOR EACH ITEM: Store the item (e.g., fridge, larder)

Please note that the one main loop of the original algorithm was split into three that are somewhere in the middle. The complexity has grown. Even worse, there are more complex error scenarios with the new algorithm. E.g., a very long shopping list might exceed the capacity of the car to transport all the items. These additional scenarios need special error handling in the program. Alternatively the algorithm could prevent that scenario by splitting up large purchases into multiple smaller parts—that are known to fit into the car. However, the additional complexity needs more time to implement and introduces more scenarios to test. The efforts explode—especially since the overflow scenarios are hard to test.

A word of warning: The case that your shopping doesn’t fit into your car might look very unlikely, and perhaps it is. However, in computer programs, there fewer natural limits than in real life—e.g., what your family can eat. From personal experience I’d say chances are 60% to hit this problem within the first year in production.

After all, there is a trade off between software complexity and software performance. From another point of view, it’s a trade off between software development speed and software runtime speed. Imagine you are a developer who is pushed to deliver working software very soon—which algorithm would you implement?

Finally, there is also a reason why latency bandit applications can be produced by accident:

But it works in development/test environment

Development takes often place on desktop hardware where everything is installed on one computer. Obviously, there is no firewall in place nor is there any network involved. The latency in these development deployments is only a fraction (e.g., a 10th) of the latency involved in a multi-tier production environment. Taken that to the shopping analogy would mean that the super market is in the room next to the kitchen. However, this kind of private shopping mall is not yet commonplace.

How to Avoid it

So, let’s look into the more technical part of the story. Which techniques can be used to reduce the number of database round trips? The following list shows the three most effective ways to avoid unneeded latency:

- Use Joins

- Use array/batch functionality

- Use advanced techniques (open cursors, fetch buffers, bulk/implicit commit, …)

Join

A common anti-pattern is to issue a select statement for each row fetched from another select. I refer to this method as nested select as opposed to a nested loops join. Both methods implement the same idea; fetching data from table B for each row in table A. However, nested selects introduce additional round trips. The most important technique to avoid latencies is therefore to tell the database what data is needed with a single SQL statement.

The nested select anti-pattern can be applied to any extend. They can be nested several levels deep, or kept at one level that triggers more than just one nested select. I have mentioned the 50 million SQL batch job that took more than 10 hours—just for latency. This particular job has queried some 10 additional child tables for each row fetched from the main query. The main query returned some million records so that the latency became the most dominant performance factor.

There were several organizational and technical reasons for the developers to implement it that way. First of all, there was a corporate coding guideline that forbids to write SQL if there are existing functions that can deliver the data. Another problem was that there was a dependency between the main table and the nested selects; the tables to be queried in the nested selects were determined by values from the main query. However, there were only three different cases, the best solution was therefore to make three different SQLs. In case a single result set is needed (e.g., because of a order clause), a UNION can be used.

After all, 10 out of 12 hours overall run time was saved just by using joins.

Batch Execution

The second major latency reduction technique is to utilize batch execution—also known as array execution. Many databases provide a way to execute more than one statement in a single round trip. However, there are APIs that don’t expose that functionality to the developer. Although JDBC does there are still some pitfalls:

-

Error handling is terrible.

Although the API defines methods to identify which statement failed, the API is very cumbersome. To make things worse it’s not even defined if the execution of the batch stops upon errors. My Best Current Practice is to set a Savepoint prior array execution. In case of error, a rollback to the Savepoint can be done.

-

Very large batches.

Very large batches can have their own problems. As with the shopping example, every implementation must be prepared to use multiple batches in case the number of statements becomes very high.

-

Does not always work faster.

The actual performance gain is vendor specific and, more importantly, depends on the method used. For example, the Oracle database benefits from batching only if

PreparedStatementsare used. BatchingStatementobjects doesn’t give any performance gain. On the other side, MySQL can also benefit fromStatementbatches. As always, the advice is to usePreparedStatementswhenever possible. This will almost always result in major performance improvements. -

The JDBC API is optional.

You must check if the driver supports the API. Realistically I would suggest to build an abstraction that takes care of that and executes the statements individually if batch execution is not supported. Ideally the JDBC driver would do so.

These are other important issues—that cause considerable efforts—to take care of when using batch execution. Still all the efforts can easily pay off. In many cases the execution of a PreparedStatement batch with two elements takes no longer than the execution of a single statement, so that the execution is effectively twice as fast. For trivial SQL statements, a speed improvement of factor 10 is within easy reach. That justifies some efforts.

Advanced Techniques

There are many more ways to reduce latency. They can be classified in two different types: SQL improvements or API improvements.

Let’s start with SQL improvements.

-

Join.

Again, just as a reminder. The most powerful way to reduce latencies.

-

INclausesConsider to use an

INclause to fetch multiple records form the same table in one run. Unfortunately, the SQL language doesn’t guarantee that the order of the result sets corresponds to theINclause order. An additional layer (e.g., HashMap) might be used to handle that issue. Another potential problem is that the number of items in theINlist has a maximum—1000 in Oracle: ORA-01795. However, if the list can grow that big, chances are good that the list itself was retrieved from the database. In that case, a join might be the better solution—just to mention it once more. -

UNIONIf data from two different tables that have the same structure is needed, you can use a

UNIONto fetch both at once. For example aCURRENTand aHISTORYtable might contain the life-cycle of a row. To obtain the current and all past versions, both tables must be queried. Another examples are pre-calculated aggregates; one table contains all the aggregates as of yesterday and a second table all the new records since then. To get the total figure—as of “now”—both tables must be queried. -

RETURNINGclause.You must often perform two steps to use a generated key from a sequence for an insert; select the new key and the insert the row. The Oracle database’s solution is the

RETURNINGclause of theINSERTstatement. Although the JDBC API acknowledges the problem since version 3.0, the Oracle implementation is not very useful—it returns theROWID. A workaround is available. -

1×1 Cartesian product or

CROSSjoinThe so-called Cartesian product (everything with everything) is sometimes used accidentally and can result in huge result sets. That’s the reason why SQL-92 introduced a distinct keyword for it:

CROSS. On the other side, the Cartesian product of two single row statements is, again, a single row:SELECT a.*, b.* FROM a CROSS JOIN b WHERE a.id=1 AND b.id=2This technique can be used to combine multiple single-row statements into a single server round trip. Please note that this works only if both statements return exactly one row, not more, not less. This is more often an option of last resort than a first choice.

These are the typical SQL based techniques to reduce the number of SQLs executions to decrease latency. Another strategy to reduce the number of round trips is to use the API differently:

-

Batch execution.

Sorry for the repetition, but it’s the most important technique.

-

Open cursors

The same

PreparedStatementinstance should be used if the same SQL statement is executed multiple times. The first execution of aStatementinvolves an additional round trip to open the cursor. This additional round trip can be avoided when the samePreparedStatementinstance is reused. As a side effect,PARSINGoverhead is also reduced (see also: Parsing Overhead Explained).However, the number of open cursors has a hard limit; don’t forget to close them when the work is done.

-

Increased PreFetch

The network layer of the Oracle database utilizes a technique called PreFetch to reduce round trips for

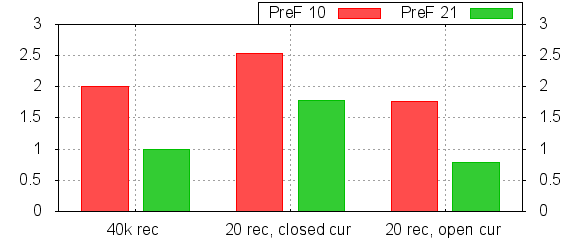

SELECTstatements. The individual rows are not transferred one-by-one but a certain number or rows is sent in one round trip. The Oracle client library handles this PreFetch transparently and still provides one row after each other. ForSELECTstatements, PreFetch is almost as powerful as batch execution forINSERT/UPDATE/DELETEstatements and can reduce the latencies by an order of magnitude for large result sets. The current default for the Oracle JDBC driver is to fetch at most 10 rows in each server round trip. The JDBC standard provides a way manipulate this value: Statement.setFetchSize(20); If your application typically fetches 20 records, you might set the value accordingly. There is no (notable) harm for statements that return less than 20 records.UPDATE 2010-01-29: This paragraph was updated to reflect my later findings regarding Oracle JDBC PreFetch Portability.

The following chart shows various select executions with different PreFetch sizes. The first pair selects 40000 rows from the database. Fetching twice as many records in one round trip, cuts the overall time down to the half. The other figures represent the execution of a statement that returns 20 rows. Those statements were executed 1000 times in a row to get reasonable values. The repeated execution allows the open cursor optimization to be applied as well. The middle pair of bars shows the execution performance with a PreparedStatement that is closed after each execution. The last pair shows the same but the PreparedStatement was opened only once and used then for all 1000 executions.

The latency difference between 10 records PreFetch with a “closed cursor” to the 20 records PreFetch with an “open cursor” is 70%.

Please note that the statement used for this statistics was very trivial and can be assumed to be executed instantly. The improvements visible in the chart above do therefore indicate the maximum possible improvement.

The Bottom Line

Increasing network complexity introduces additional latencies that might affect applications performance.

There are many ways to reduce server round trips to make an application less sensitive to network latencies. Most of them involve revised process flows and increase application complexity.

“Firewall qualified” applications have an competitive advantage and attest awareness for complex production deployments.

It’s not fatal to open your mind to new approaches.

How Internet Works

There is another excellent cartoon that explains latency in context of the Internet.